INTELLIGENCE REPORT: AI HALLUCINATIONS

EXECUTIVE SUMMARY

CRITICAL

What happens when ChatGPT lies about your business? AI models can "hallucinate", producing plausible but false statements, especially when reliable data on a topic is scarce. If your brand's digital footprint is weak or contradictory, LLMs may invent facts, pricing, or services that you don't offer. This is a major reputation risk.

DATA CONSISTENCY

THE ANTIDOTE

We flood the digital ecosystem with consistent, verified data about your brand. By establishing a strong "Knowledge Graph" presence, we give AI models a reliable source to draw on and sharply reduce the likelihood of AI errors about your brand.

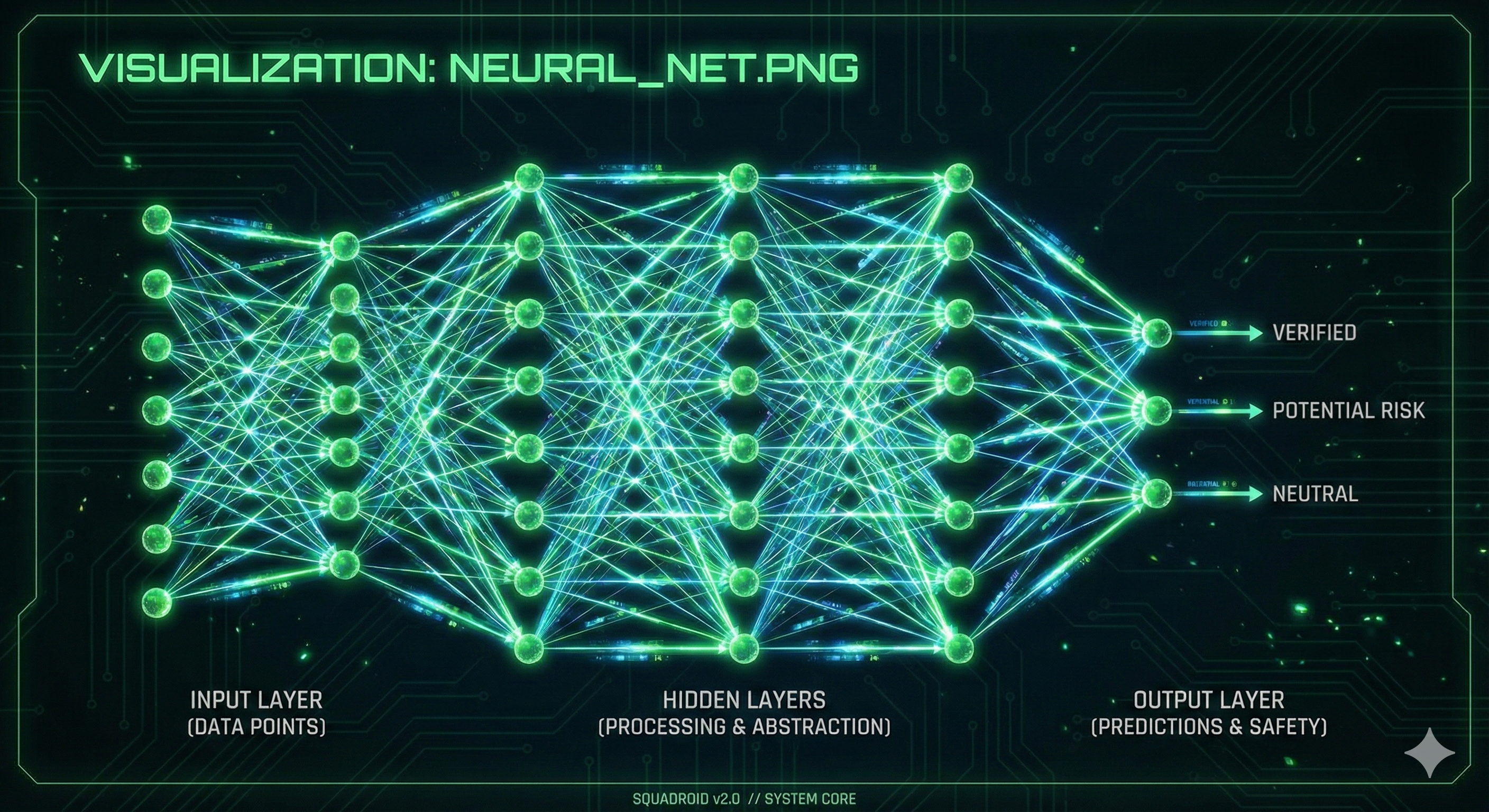

> VISUALIZATION: NEURAL_NET.PNG

DEFENSE PROTOCOLS

PROTECTION MEASURES

ACTIVE

> WIKI_OSINT

Ensuring your brand is correctly represented in open knowledge bases like Wikidata and Wikipedia.

> REVIEW_MONITORING

Actively monitoring user feedback to provide context to AI crawlers and minimize negative sentiment drift.

> TECHNICAL_HARDENING

Fixing conflicting schema markup on your site that confuses the bots.
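
To make "conflicting schema markup" concrete, here is a minimal sketch of one way such conflicts could be detected. It assumes the markup is JSON-LD embedded in `<script type="application/ld+json">` tags, and it only compares a few top-level string fields. The function name `find_conflicts`, the field list, and the sample business data are hypothetical and chosen for illustration; this is not a description of any particular audit tool.

```python
import json
from collections import defaultdict
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def find_conflicts(html, fields=("name", "telephone", "priceRange", "url")):
    """Return {(schema_type, field): [values]} where one field has several values.

    Hypothetical helper: only top-level string fields are compared, and
    blocks that are not valid JSON are skipped.
    """
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()

    seen = defaultdict(set)
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                continue
            schema_type = item.get("@type")
            if not isinstance(schema_type, str):
                continue
            for field in fields:
                value = item.get(field)
                if isinstance(value, str):
                    seen[(schema_type, field)].add(value.strip())

    # Keep only the fields that were declared with more than one distinct value.
    return {key: sorted(values) for key, values in seen.items() if len(values) > 1}


if __name__ == "__main__":
    # Illustrative page: two blocks disagree on the phone number.
    sample = """
    <script type="application/ld+json">
    {"@type": "LocalBusiness", "name": "Acme", "telephone": "555-0100"}
    </script>
    <script type="application/ld+json">
    {"@type": "LocalBusiness", "name": "Acme", "telephone": "555-0199"}
    </script>
    """
    for (schema_type, field), values in find_conflicts(sample).items():
        print(f"{schema_type}.{field} has conflicting values: {values}")
```

A check like this only flags disagreements within a single page. Deciding which value is correct, and comparing the page against external sources, would still be a manual step.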